Technology Is Not An End But Means To Make Customer Life Easier: Manu Saale

- February 04, 2020

Mercedes-Benz R&D India (MBRDI), founded in 1996 in Bengaluru to support Daimler’s research, IT and product development activities, is now one of the largest global R&D centres outside Germany. Employing close to 5,000 skilled engineers, it is a valuable centre to all business units and brands of Daimler worldwide. The centre is also a key entity for Daimler’s future mobility solutions through C.A.S.E (Connected, Autonomous, Shared and Electric) for building autonomous and electric vehicles. The centre’s competencies in engineering and IT have progressed to using AI, AR, Big Data Analytics and other modern technologies to provide seamless connectivity. During an interaction with T Murrali, the Managing Director and CEO of MBRDI, Manu Saale, said, “The centre has been growing phenomenally. We have just started a team on cyber security... We have been helping to simulate some stack-related solutions using fuel cells. I’m waiting for a clear strategy from the company for a possible venture into the hydrogen path.” Edited excerpts:

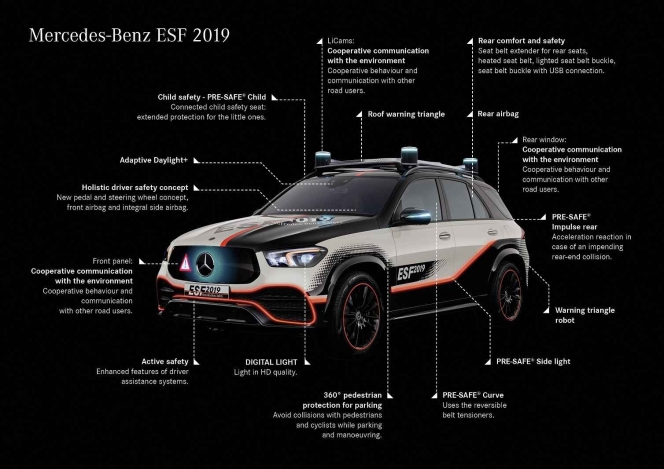

Q: Could you begin by detailing the contribution of MBRDI to the Experimental Safety Vehicle (ESF)?

Saale: The ESF is a concept vehicle. We have taken a GLE platform, tried to predict the technologies that are coming up and put demo versions of them inside. Some of them are just future technologies but they are strictly based on the data we have collected, and the accident research and digital trends that we have seen.

There is a worldwide safety team, centred in Germany and India, which is studying all these data and statistics to predict what the future should look like. Mercedes-Benz has a history of building concept cars as mobility is changing around us. This time we have decided to put safety in perspective for the new age mobility with ESF2019.

For example, in a driverless car there is no steering wheel, so where will you put the airbag that has so far been placed in the steering wheel? This means that the airbag concept will have to change. If you go white-boarding on this topic you will realise that some fundamental things you have been counting on all these years will change. This international team in Bengaluru supporting Germany has been working on many concepts of this kind.

We have brought it here for two reasons. One is for the contribution from India. A lot of digital simulations have been done before implementing the hardware. Bengaluru has contributed to the digital evaluation of the new safety concepts in ESF. The other reason is to inspire the engineers to innovate further based on the first level of fantasies that we have created, and how it could be taken to the next level. These are the kind of things we want our engineers to think about; ESF is a pointer in that direction.

Q: What are the possible changes with the emergence of EVs and autonomous vehicles for safety?

Saale: Imagine not being able to predict the position of passengers when a crash happens. If they are sitting in a conference mode, facing one another, how can they be protected without an airbag in front of them? That’s one; second is the use of different materials within the car and the dynamics that could happen in an accident. Third is connection to the source of a fuel tank / pack, not specific to one place but probably spread across the floor of a car. The battery and its chemical components are also critical in a crash situation.

There are many new things when we think about safety in autonomous and electric vehicles; whereas connectivity plays into our hands. I don’t think the industry has exhaustively thought about what new dimensions can come from driving autonomous vehicles.

Q: What happens if the accident is so severe that all the electrical connections are cut off? Has any thought gone into this?

Saale: I am sure they have thought about it. An airbag can pop up in milliseconds; an SOS message is sent post-crash. Today, in an instant, we can ping the world somehow, so information on position, latitude, etc. is sent out immediately when an accident takes place. Of course, it depends a lot on the emergency services and collision response in the country.

Q: What is the role played by MBRDI in the development of Artificial Intelligence (AI) and Augmented Reality (AR)?

Saale: This is the new age digital; we don’t have to go back to the old world of software alone. Digital has shown new potential in the last few years and we have tried to keep pace with the current trends. AI is certainly one of the buzz words that is coming up.

MBUX, which we flagged off in Bengaluru a few weeks ago, showcases how AI could be used as a technology to make customer life easier in the car. We look at all the use cases to find out what the customer does in a car.

For example, take the use of a camera in a car. During night driving, if the driver extends his hand to the vacant seat next to him looking for something, and it is dark, the camera will sense that he is seeking something and switch on the lights. We need AI for that because we have to understand the hand position and the amount of stretch done; it should not be confused with the driver stretching himself after yawning. Such a simple use case requires a lot of technology. These are things where people look at customer behaviour and say ‘technology is not for the sake of technology but to make customer life easier.’

Q: The Tier-1 companies spread across Germany have come up with many futuristic solutions for vehicles. They have their own research centres. So what is the role of R&D centres of OEMs like this other than integration?

Saale: Every centre has to ride its own destiny. Even if we are a GIC we cannot expect HQ to hold our hand for ever. It’s a typical parent-child relationship and not a customer-supplier one. We have seen all the combinations of GICs working out there in the market. I think we have a good success story here. That is the value-add GIC has to think about.

A survey was done on the value-add from GICs; they used the word entrepreneurship from GICs. It was found that only 6 percent of GICs were entrepreneurial, that were really able to innovate. We were also named in that top 6 percent. It depends on the company culture, relationships, handling discussions with HQ and the local leadership teams. That’s the challenge in a GIC compared to a profit centre that is looking from one customer to another.

Q: You are also in touch with suppliers in India and across the globe for necessary hand-holding?

Saale: Absolutely, imagine a situation where the parents trust the child completely.

Q: You will be the parent and Tier-1s the children?

Saale: No, it is not that way. We behave as Daimler when we talk to Tier-1s. We tell them that ‘you know the car well, so do it by yourself and deliver the product.’ That’s the level of maturity in interaction that one can reach.

Q: When it comes to electronics, OEMs the world over are faced with many regulations. Do you see options for them to comply with all the regulations considering the amount of electronics coming into the car?

Saale: Every new thing is a technical challenge on the table. It can be stricter emission norms or features and functionalities that are difficult to reach, a technical compliance issue that crops up every now and then, and a safety or parking aspect that is covered by many regulations around the world. We thrive on such challenges that have pushed a company like Mercedes to keep on inventing because, among many other things, hardware is getting cheaper and smaller, software capabilities are growing, connectivity is increasing, computing external to the car is possible, and so many other things. OEMs are dealing with authorities, trying to handle what is possible at lower cost, because at the end of the day we have to sell. I am sure that regulators and societies around the world today are looking for some balance between technology and cost.

Q: How do you manage multiple sensors in the vehicle?

Saale: Digital appears to be very complex now but electronics will go through its life cycle and come to a point where man understands its complexity and is able to put it all together. Today, we are talking about sensor fusion - putting together the net of information and seeing it as a whole through various sensors.

Functionalities could range from a switch to radar or lidar with their spectrum of signals, to give various resolutions; the processing capability would be in milliseconds. The more we comprehend the mixed bag of signals we get, the better will be our ability to make the right decisions.
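As an editorial illustration of what sensor fusion can mean in its simplest form, the sketch below combines distance estimates from several sensors by inverse-variance weighting, so that less noisy sensors count for more. This is a textbook technique chosen for brevity, not a description of how Mercedes-Benz or MBRDI fuse signals; the `Reading` class, the `fuse` function and all numeric values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One distance estimate (metres) with its noise variance (m^2)."""
    source: str
    distance_m: float
    variance: float


def fuse(readings: list[Reading]) -> tuple[float, float]:
    """Inverse-variance weighted average of independent readings.

    Returns the fused distance and the variance of that fused estimate.
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / r.variance for r in readings]
    total = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    return fused, 1.0 / total


# Hypothetical readings of the same obstacle from three sensors.
readings = [
    Reading("radar", 25.4, 0.50),
    Reading("lidar", 25.0, 0.05),
    Reading("camera", 26.0, 2.00),
]
distance, variance = fuse(readings)
print(f"fused distance: {distance:.2f} m, variance: {variance:.3f} m^2")
```

With these made-up numbers the fused estimate sits close to the lidar value, and its variance is lower than that of any single sensor, which is the basic benefit of seeing the signals as a whole.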

Q: With all the facilities that you provide to the driver, are you not actually deskilling him?

Saale: The trend is that people don’t want to get into the hassles of driving a vehicle. Driving is stressful and cumbersome to many which is why the autonomous car would gain popularity. The driver has to just punch in where he/she has to go and the vehicle will do it automatically, saving both mental and physical tension. A completely new user base is being introduced into mobility with software features. We have to look at it positively.

Q: Are you also working on cyber security, on things that get into the car?

Saale: We have just started a team now. Our focus on cyber security is at a centre in Tel Aviv, Israel.

Q: Do you see scope to improve the thermal efficiency of Internal Combustion (IC) engines further?

Saale: I think the capability, from an engineering perspective, exists to take the IC engine to the next level. The potential continues to be there and all OEMs talk about it. Possibly it is getting affected by the social and environmental aspects.

Q: It is said that the exhaust from a Euro-6 engine is far better than the atmospheric air in many highly polluted cities and it is not actually polluting. What is your opinion?

Saale: It is true. But people say if electricity is generated from coal then aren’t we contributing to pollution? If we localise electricity production to one area with everything contained then it would give us better scope to control it rather than spewing it out of every vehicle tail-pipe all over the world.

Imagine millions of polluting vehicles moving around compared to millions of electric vehicles, which don’t have any tail-pipe emissions, with electricity generated by coal that is centralised; it would be a completely different technical and logistic challenge from the environmental point of view. Regulators, politicians and policy makers are all giving their views on this issue; the improvement in living standards and the coming up of smart cities would affect it. I think we are moving in the right direction with the greening of the environment covering everything. I see this sustainable city living much better pictured with electric vehicles moving around me.

Q: Can you tell us about the work done around IoT?

Saale: We are working on digitalisation of our production in many ways. One of the teams for Manufacturing Engineering in Bengaluru focuses on digital methods in manufacturing such as production planning, supply chain, logistics and IoT. The team also works on front-loading of production planning.

Q: What is your contribution to the Sprinter F-CELL, the fuel cell application, that replaced the diesel engine?

Saale: We have been helping to simulate some stack-related solutions using fuel cells. I’m waiting for a clear strategy from the company for a possible venture into the hydrogen path. (MT)

Multimatic Installs First VI-grade HyperDock System In North America

- By MT Bureau

- February 20, 2026

VI-grade has announced the installation of its HyperDock cockpit at Multimatic’s Vehicle Dynamics Centre in Novi, Michigan. This deployment marks the first instance of HyperDock technology in North America. The system upgrades an existing DiM250 driving simulator, installed in 2020, into a platform capable of simultaneous vehicle dynamics and NVH (Noise, Vibration, and Harshness) development.

The HyperDock consists of a carbon-fibre cockpit designed to increase stiffness and reduce inertia. By removing the traditional top disk in favour of a direct actuator interface and integrated vibro-acoustic feedback, the system allows engineers to assess ride, handling and acoustics within a single environment.

The upgrade introduces ‘full-spectrum’ simulation, which bridges the gap between high-frequency vibration testing and low-frequency motion cues.

- Construction: Lightweight carbon-fibre frame.

- Interface: Direct actuator connection to minimise signal delay and mechanical loss.

- Feedback: Integrated tactile and audio systems for vibro-acoustic realism.

- Application: Simultaneous tuning of vehicle handling and interior cabin noise.

Peter Gibbons, Technical Director – Vehicle Dynamics, Multimatic, said, “After evaluating the VI-grade HyperDock Full Spectrum Simulator cockpit at the SimCenter Udine over a year ago, Multimatic quickly realized that it would provide a significant step forward in the fidelity of all DiM applications, from road car ride tuning to race car limit handling. The overwhelmingly positive responses from Murray White, Technical Director of Vehicle Development at Multimatic, and Dirk Müller, professional race car driver, affirmed Multimatic’s decision to upgrade to HyperDock. The added immersion, superior tactile feedback, and audio advancements have moved the goalposts well beyond our expectations. Multimatic looks forward to continuing to leverage the impressive capabilities of HyperDock over the coming years.”

Alessio Lombardi, Global Sales Director – Simulation, VI-grade, added, “With the addition of HyperDock, Multimatic now benefits from full-spectrum simulation capability, expanding the scope of development activities that can be performed on an already well-established simulator platform. This installation represents an important milestone for VI-grade, as it brings HyperDock technology to North America for the first time.”

ADAS 2026 Show Looks At Autonomous Driving Developments

- By Bhushan Mhapralkar

- February 20, 2026

Postponed from December 2025 to February 2026, the ADAS Show 2026 by Aayera was a combination of stalls where diverse players from the field of ADAS or autonomous driving highlighted their latest developments. There were live demo sessions that saw the use of passenger vehicles, trucks and dummies to highlight the technological prowess in the field.

Held at ARAI’s newest testing and certification facility for ADAS and other modern automotive technologies at Takwe (Pune), the show saw experts speak about the autonomous future in panel discussions and presentations. Live demos highlighted progress on the computing, vision and software fronts, underlining a certain zest.

In his inaugural address, Dr Reji Mathai, Director, ARAI, spoke about the motive behind setting up an ADAS testing facility at Takwe. Observing that test tracks never give returns, to draw attention to the decision to set up an ADAS testing track at Takwe (the newest yet by ARAI), Dr Mathai said that ARAI participates in regulation-making at the UN level.

Dr Mathai; Elie Luskin, Vice President – India and China, Mobileye, and Nina Roeck, Vice President – Software Engineering (Drive & Comfort), Ampere (Renault Group), were united in their views about India’s unique traffic and driving conditions. The trio stressed the localisation of ADAS system parts such as sensors, local engineering and development, and local testing and validation.

“In India, the conditions are different and the effort therefore is to focus on perception, alerts and interventions that consider the local driving condition,” said Roeck.

Asserting that India’s expanding auto market has disproportionately low ADAS adoption, Luskin explained, “ADAS would become mainstream as India’s GDP per capita grows.”

Apurbo Kirty, Head – Electrical & Electronics, ERC, Tata Motors, focused on advanced driver assistance in CVs in his address. He referred to road challenges in India, road accident statistics and the challenges for ADAS implementation in terms of SAE autonomy levels, regulations like GSR 834 and how ADAS is a necessity rather than just a tech upgrade.

Touching on the complex landscape of Indian road conditions, Abijit Sengupta, Head of Business – SAE and India, HERE Technologies, spoke about vehicle safety trends such as connected vehicles, autonomous, shared services and electrification.

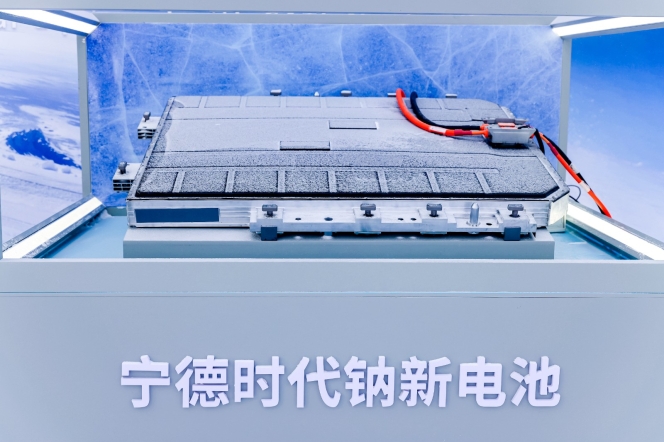

Changan And CATL Launch Mass-Production Sodium-Ion Battery Vehicle

- By MT Bureau

- February 19, 2026

Changan Automobile and CATL have unveiled the first mass-production passenger vehicle equipped with sodium-ion batteries. The vehicle, showcased at the 'Changan SDA Intelligence Milestone Release', is scheduled for market release by mid-2026. CATL, acting as the strategic partner for the project, will supply its Naxtra sodium-ion batteries across Changan’s brands, including Avatr, Deepal, Qiyuan and UNI. The partnership introduces a dual-chemistry approach to the market, utilising sodium-ion alongside lithium-ion technologies.

CATL's Naxtra battery reaches an energy density of 175 Wh/kg. Utilising a Cell-to-Pack system and a battery management system (BMS), the technology provides a range exceeding 400 km. Future iterations are projected to reach 500–600 km for battery electric vehicles (BEVs) and 300–400 km for hybrids.

The technology is designed for operation in cold climates. At –30°C, the battery delivers triple the discharge power of lithium iron phosphate (LFP) alternatives. It maintains 90 percent capacity retention at –40°C and continues to function at –50°C. Safety testing, including drilling and crushing, resulted in no smoke or fire.

The global sodium-ion battery market is forecast to grow from USD 1.39 billion in 2025 to USD 6.83 billion by 2034. To support adoption, CATL plans to establish over 3,000 Choco-Swap battery swap stations across 140 cities in China by the end of 2026, with a focus on northern regions.
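For readers who want the growth rate implied by that forecast, the short sketch below derives a compound annual growth rate (CAGR) from the two figures quoted above. It assumes growth compounds once a year over the nine years from 2025 to 2034; the forecast as quoted states no rate, so the result is an illustration and not a figure from CATL or Changan. The function name `cagr` is ours.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values, as a fraction."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the article, in USD billion.
start_2025 = 1.39
end_2034 = 6.83

rate = cagr(start_2025, end_2034, 2034 - 2025)
print(f"implied CAGR: {rate:.1%}")
```

Under that assumption, the forecast works out to a growth rate of a little over 19 percent a year.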

The launch follows a decade of research. Since 2016, CATL has invested nearly 10 billion RMB (USD 1.45 billion) into sodium-ion technology, developing approximately 300,000 test cells. The project was supported by a dedicated team of 300 personnel to ensure scalability and performance.

Gao Huan, CTO of CATL's China E-car Business, said, "The arrival of sodium-ion technology marks the beginning of a dual-chemistry era. Changan's vision shows both its responsibility for energy security and its strategic foresight. Much as it embraced electric vehicles years ago, Changan is once again taking the lead with its sodium-ion roadmap. At CATL, we value the opportunity to work alongside such an industry leader and fully support its strategy, combining our expertise to bring safe, reliable and high-performance sodium-ion technology to market."

drivebuddyAI Demonstrates Scalable ADAS Platform At India’s First ADAS Test Track

- By MT Bureau

- February 18, 2026

Following its international unveiling at CES 2026, drivebuddyAI, a leading innovator in AI-powered Advanced Driver Assistance Systems (ADAS) and Driver Monitoring Systems (DMS), recently demonstrated its technology at the ARAI ADAS Test City. The company presented its range of vision-based ADAS and DMS products, focusing on their reliability in the varied and challenging conditions typical of Indian roads.

Live demonstrations were conducted using a heavy commercial vehicle to showcase the platform's versatility in meeting various compliance standards. A single, integrated hardware and software setup, utilising a fused network of cameras for 360-degree perception, executed multiple test scenarios simultaneously. These included a driver monitoring system that detects drowsiness, distraction and seatbelt usage in line with both Indian and European regulations. Further tests illustrated the vehicle's ability to warn of pedestrians moving into its path, identify potential collisions with cyclists in blind spots and issue forward collision warnings by combining radar and camera data.

Beyond merely fulfilling test requirements, the demonstrations highlighted practical applications that extend into everyday driving situations. This focus on real-world functionality is backed by extensive validation, with the company's systems having analysed nearly four billion kilometres of driving data. This has reportedly led to significant safety improvements, including a marked decrease in incidents caused by driver fatigue and a substantial reduction in overall fleet risks.

Currently validated for commercial vehicles against India's AIS-184 standard and Europe's stringent General Safety Regulation and Euro NCAP protocols for 2026, the technology is also adaptable for passenger cars. This scalability offers automotive manufacturers and their suppliers a pathway to not only meet but surpass upcoming global safety mandates. By refining its AI through extensive fleet operations over billions of kilometres before adapting it for original equipment manufacturer compliance, drivebuddyAI aims to deliver a mature, rigorously tested product that ensures an enhanced user experience.

Nisarg Pandya, CEO and Founder, drivebuddyAI, said, “ADAS Test City from ARAI is a great initiative, and we value participating in a format where we can showcase live demonstrations to a large audience together on the vehicle. This time, the turnout was significant and provided a strong opportunity to establish drivebuddyAI as one of the key players in the upcoming OEM compliance requirements. The engagement and response we received were phenomenal, reinforcing both the market need and the industry’s confidence in our solutions. The upcoming ADAS-compliant vehicles must have technology that works in Indian scenarios to achieve meaningful safety improvements and reduce fatalities.”
